Number of Trials to First Success

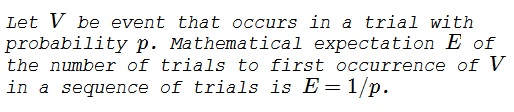

Informally, the probability of an event is the average number of times the event occurs in a sequence of trials. Another way of looking at that is to ask for an average number of trials before the first occurrence of the event. This could be formalized in terms of mathematical expectation.

Proof

We shall call an occurrence of \(V\) in a trial a success; a trial is a failure otherwise.

The event will occur on the first trial with probability \(p\). If that fails, it will occur on the second trial with probability \((1-p)p\). If that also fails, the probability of the event coming up on the third trial is \((1-p)^{2}p\).

More generally, the probability of the first success on the \(n\)th trial is \((1-p)^{n-1}p\). We are interested in the expected value:

\(E = p + 2(1-p)p + 3(1-p)^{2}p + \ldots + n(1-p)^{n-1}p + \ldots\)

One way to determine \(E\) is to use a generating function:

\(f(x) = p + 2(1-p)px + 3(1-p)^{2}px^{2} + \ldots + n(1-p)^{n-1}px^{n-1} + \ldots\)

By the definition, \(E = f(1)\). (Note that, since, the series converges for \(x=1\), \(f(1)\) does exist.) To find \(f\) in a closed form, we first integrate term-by-term and then differentiate the resulting function.

\(\displaystyle \begin{align} F(x) = \int{f(x)dx} &= \int\sum_{n=0}(n+1)(1-p)^{n}px^{n}dx \\ &= \sum_{n=0}(1-p)^{n}p\int{(n+1)x^{n}dx} \\ &= \sum_{n=0}(1-p)^{n}px^{n+1} + C \\ &= \frac{p}{1-p}\sum_{n=0}(1-p)^{n+1}x^{n+1} + C \\ &= \frac{p}{1-p}\frac{(1-p)x}{1 - (1-p)x} + C \\ &= \frac{px}{1 - (1-p)x} + C. \end{align} \)

Now differentiate:

\(\displaystyle f(x) = F'(x) = \frac{p}{(1-(1-p)x)^{2}}\)

from which \(\displaystyle f(1)=\frac{p}{p^{2}}=\frac{1}{p}\), as required.

Shortcut

There is a shortcut that makes the finding of \(E\) more transparent. Obviously, the expectation of the first success counting from the second trial is still \(E\). Taking into account the first trial, we can say that with probability \(1-p\) the expected number of trials to the first success is \(E+1\), while it is just \( 1 \) with probability \( p \). This leads to a simple equation:

\(E = p + (1-p)(E+1) = 1 + E(1-p).\)

Solving this equation for \(E\) gives \(\displaystyle E=\frac{1}{p}\).

This derivation could be done more formally (the case of \(\displaystyle p=\frac{1}{2}\) was treated separately elsewhere):

\(\displaystyle \begin{align} E &= p\sum_{n=0}(n+1)(1-p)^{n} \\ &= p\sum_{n=0}n(1-p)^{n} + p\sum_{n=0}(1-p)^{n} \\ &= p\sum_{n=1}n(1-p)^{n} + p\cdot \frac{1}{1-(1-p)} \\ &= (1-p)p\sum_{n=0}(n+1)(1-p)^{n} + 1 \\ &= (1-p)E + 1. \end{align} \)

- What Is Probability?

- Intuitive Probability

- Probability Problems

- Sample Spaces and Random Variables

- Probabilities

- Conditional Probability

- Dependent and Independent Events

- Algebra of Random Variables

- Expectation

- Admittance to a Tennis Club

- Average Number of Runs

- Number of Trials to First Success

- Probability Generating Functions

- Probability of Two Integers Being Coprime

- Random Walks

- Probabilistic Method

- Probability Paradoxes

- Symmetry Principle in Probability

- Non-transitive Dice

|Contact| |Front page| |Contents| |Probability|

Copyright © 1996-2018 Alexander Bogomolny

73765902